Joint Learning of Words and Meaning Representations for Open-Text Semantic Parsing

2022. 7. 23. 00:36

💡 Key concepts to review before reading

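- Semantic parsing

- Converting a natural-language utterance into a formal meaning representation (MR) that a machine can understand

-> a–c: natural-language utterances, d: MR

- MR (meaning representation)

- A formal structure that captures the meaning of a linguistic input

- Can be seen as a bridge between subtle linguistic nuances and non-linguistic common-sense knowledge about the world

- ex) How can you tell whether someone complimented you or insulted you?

- -> By breaking the linguistic input (what the other person said) into a meaningful structure and linking it to knowledge about the real world (who the speaker is, your relationship with them, past experience, etc.), you can work out the speaker's intent

- Ways of representing meaning

- First-Order Logic

- Abstract Meaning Representation (AMR) using a directed graph

- Abstract Meaning Representation (AMR) using the textual form

- Frame-based or slot-filler representation

- → All four approaches share the idea that a meaning representation consists of structures corresponding to objects, their properties, and the relations between objects

- representation

- A form of the actual text that a language model can compute with

- Divided into count-based and distribution-based representations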

Abstract

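- An open-text semantic parser is designed to interpret any natural-language sentence by inferring its MR (meaning representation)

- Large-scale systems are hard to build by machine learning because supervised training data is scarce

- The paper proposes a method that assigns MRs to a broad range of text (using a dictionary of more than 70,000 words mapped to more than 40,000 entities). This is made possible by a training scheme that combines learning from knowledge bases such as WordNet with learning from raw text

- WordNet

- A lexical database of English organized by meaning

- Groups English words into sets of synonyms called 'synsets', gives each a short, general definition, and records the various semantic relations between these sets

- => A dictionary tailored to natural language processing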

- The paper's model jointly learns representations of words, entities, and MRs through a multi-task training process that draws on diverse data sources

- Multi-Task Learning

- A learning paradigm that trains related tasks together, at the same time, to improve performance across all of them

- When a task needs a lot of labeled data that is hard to collect, multi-task learning can be a good solution

- Inspired by the way humans learn something new faster by building on similar past experience

- https://velog.io/@riverdeer/Multi-task-Learning

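- Provides, in a single framework, methods for knowledge acquisition and word-sense disambiguation in the context of semantic parsing

- knowledge acquisition

- Acquiring knowledge

- The process used to define the rules and ontologies required for a knowledge-based system

- https://en.wikipedia.org/wiki/Knowledge_acquisition

- word-sense disambiguation (WSD)

- The task of determining which of a word's dictionary senses it carries in a given context

- ex) For the Korean word 밤 ("night" or "chestnut"): occurrence 1 → 밤01, occurrence 2 → 밤02, occurrence 3 → 밤01. Each occurrence is linked to a sense in the dictionary

- Because each occurrence has to be linked to one of the dictionary's senses, WSD requires dictionaries or other knowledge-base resources

- Hence knowledge-based approaches or supervised approaches are usually used

- https://bab2min.tistory.com/576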

Introduction

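Research on semantic parsing can be roughly divided into two tracks

- 1) in-domain

- The goal is to learn to build highly evolved, comprehensive MRs

- Because this requires heavily annotated training data and/or MRs built for a single domain, these approaches usually have a limited vocabulary (a few hundred words) and correspondingly limited MRs

- 2) open-domain or open-text

- The goal is to learn to associate MRs with any kind of natural-language sentence

- Because labeling large amounts of free text with MRs that capture deep semantic structure is infeasible, supervision is weaker

- As a result, models infer simpler MRs → this is also called shallow semantic parsing

- The paper addresses the open-domain setting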

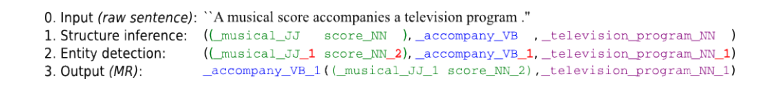

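- MRs are inferred for a given sentence in two steps

- (1) semantic role labeling step → predicts the semantic structure

- (2) disambiguation step → assigns the corresponding entity to each relevant word so as to minimize a learned energy function

- To cope with the lack of strong supervision, the system is built on an energy-based model trained on data from multiple sources

- energy-based model

- A model that learns an energy function assigning low energy to inputs X within the data distribution and high energy to all other inputs. It thus not only raises the probability of regions where data exists but also lowers it where there is none (often used as a generative model)

- https://post.naver.com/viewer/postView.naver?volumeNo=31743752&memberNo=52249799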

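- The paper's energy-based model is trained to jointly capture semantic information about words, entities, and their combinations

- Everything is encoded as a distributed representation, in which a low-dimensional embedding vector is learned for each symbol

- distributed representation: a dense, distribution-based word representation

- Vectorizes a target word based on the words around it

- Based on the distributional hypothesis ("words that appear in similar contexts have similar meanings"), a word's vector is determined by the distribution of its neighboring words, and its meaning is spread across all dimensions of a dense vector, hence the name distributed representation

- ex) Word2Vec, fastText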

- The paper's semantic matching energy function is designed to blend these embeddings so that plausible combinations get low energy values

- Resources such as WordNet and ConceptNet encode common-sense knowledge as relations between entities (ex: _has_part(_car, _wheel)), but they do not link this knowledge to raw text (sentences)

- Conversely, text resources such as Wikipedia are not grounded in entities

- The paper's training procedure is based on multi-task learning over several datasets

- With this approach, MRs induced from text and relations between entities are embedded (and integrated) in the same space

- This makes it possible to learn to disambiguate raw text using a large amount of indirect supervision and a small amount of direct supervision

- The model learns to use common-sense knowledge (ex. WordNet relations between entities) to select the correct WordNet sense for a word

- Since there is no standard evaluation for open-text semantic parsing, other evaluation methods are used to assess the model

- The results cover two benchmarks: WSD (word sense disambiguation) and (WordNet) knowledge acquisition

- The paper also shows that multi-tasking with raw text makes knowledge extraction possible, i.e. learning new common-sense relations that do not exist in WordNet

Semantic Parsing Framework

2.1 WordNet-based Representations (MRs)

- The MRs considered for semantic parsing are simple logical expressions of the form $REL(A_0, \ldots, A_n)$

- $REL$: relation symbol

- $A_0, \ldots, A_n$: arguments

- Because the paper aims to parse open-domain raw text, it has to consider many relation types and arguments

- WordNet is used to define $REL$ and the $A_i$ arguments

- WordNet contains comprehensive knowledge in a graph structure whose nodes, called synsets, correspond to senses and whose edges define the relations between those senses

- synset: a set of synonyms

- Synsets are usually identified by 8-digit codes, but for clarity the paper writes a synset as word + POS tag (NN: noun, VB: verb, JJ: adjective, RB: adverb) + number (which sense it is)

- ex)

- _score_NN_1: the synset for the first sense of the noun "score"; it also contains the words "mark" and "grade" ⇒ a score (grade)

- _score_NN_2: the second sense of the noun "score" ⇒ a musical score

- Triplets $(lhs, rel, rhs)$ are used to represent instances of WordNet relations

- $lhs$: left-hand side of the relation

- $rel$: type of the relation

- $rhs$: right-hand side of the relation

- ex)

- (_score_NN_1, _hypernym, _evaluation_NN_1)

- (_score_NN_2, _has_part, _musical_notation_NN_1)

- hypernym

- A word whose meaning includes the meaning of another word

- <-> hyponym: a word whose meaning is included in a hypernym

- ex) hypernym: musical instrument, hyponym: piano

- has_part: relates a whole to one of its parts
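To make the naming convention and the triplet notation concrete, here is a minimal Python sketch. It assumes synset labels always follow the `_word_POS_number` pattern used above, with multi-word lemmas joined by underscores as in `_musical_notation_NN_1`. The helpers `parse_synset` and `as_mr` are hypothetical names for illustration and are not part of WordNet or of the paper's code.

```python
from typing import NamedTuple


class Synset(NamedTuple):
    word: str   # e.g. "score" or "musical_notation"
    pos: str    # NN, VB, JJ or RB
    sense: int  # 1-based sense number


def parse_synset(label: str) -> Synset:
    """Split a label such as '_score_NN_2' into (word, POS tag, sense number)."""
    body = label.lstrip("_")                # 'score_NN_2'
    word, pos, sense = body.rsplit("_", 2)  # split from the right so multi-word lemmas survive
    return Synset(word, pos, int(sense))


def as_mr(triplet: tuple[str, str, str]) -> str:
    """Render a (lhs, rel, rhs) triplet as the logical form rel(lhs, rhs)."""
    lhs, rel, rhs = triplet
    return f"{rel}({lhs}, {rhs})"


wordnet_relations = [
    ("_score_NN_1", "_hypernym", "_evaluation_NN_1"),
    ("_score_NN_2", "_has_part", "_musical_notation_NN_1"),
]

for t in wordnet_relations:
    print(as_mr(t), parse_synset(t[0]))
```

Splitting from the right is the design choice that keeps multi-word lemmas such as `musical_notation` intact.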

- For the final MR, $REL$ and the $A_i$ arguments are represented as tuples of WordNet synsets

- → $REL$ can be any verb; it is not restricted to one of the 18 WordNet relations

2.2 Inference Procedure

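- step 0) input

- step 1) preprocessing (lemmatization, POS tagging, chunking, SRL)

- step 2) each lemma is assigned to its corresponding WordNet synset

- step 3) the complete MR (meaning representation) is defined

Text preprocessing (lemmatization, POS tagging, chunking, SRL)

- lemmatization

- Converts each word to its base dictionary form

- plural → singular, inflected verb → base form (e.g. "ran" → "run")

- POS tagging: part-of-speech tagging

- chunking = shallow parsing

- Groups words, using their part-of-speech tags, into phrases

- Splitting a sentence into parts of speech and then, through chunking, into phrases makes its meaning easier to grasp

- SRL: Semantic Role Labeling

- The paper's semantic parsing consists of two steps → step 1) and step 2)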

Step (1): MR structure inference

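- This step preprocesses the text and infers the structure of the MR

- It relies on existing standard methods

- The SENNA software is used for POS tagging, chunking, lemmatization, and semantic role labeling (SRL)

- The paper calls the concatenation of a lemmatized word and its POS tag a 'lemma'

- lemma: the dictionary (base) form of a word

- Note that a lemma has no integer suffix, which is what distinguishes it from a synset → a lemma can be semantically ambiguous

- SRL assigns a semantic role label to each grammatical argument associated with the verb of each proposition

- → It matters because it is used to infer the structure of the MR

- The paper only considers sentences that match the (subject, verb, direct object) template

- Each of these three elements is associated with a tuple of lemmatized words (→ a multi-word phrase)

- SRL is used to structure a sentence into the template ($lhs$ = subject, $rel$ = verb, $rhs$ = object)

- In raw text the order need not be subject/verb/direct object → e.g. passive sentences

- To complete the semantic parse (or MR), the lemmas must be converted into synsets → the disambiguation of step (2)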
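The output of Step (1) can be sketched as follows. This is a hypothetical illustration, and several parts of it are assumptions rather than SENNA's actual output format or the paper's exact rules. The input frame uses PropBank-style roles (`A0` = agent/subject, `V` = verb, `A1` = patient/object). Only NN/VB/JJ/RB content words are kept, and fine-grained Penn tags such as `VBD` or `NNS` are collapsed to their first two letters. The example shows why a passive sentence still ends up in (subject, verb, object) order.

```python
CONTENT_TAGS = {"NN", "VB", "JJ", "RB"}  # assumption: only content words become lemmas


def to_lemma(word, penn_tag):
    """'kick' + 'VBD' -> '_kick_VB'; function words (DT, IN, ...) -> None."""
    coarse = penn_tag[:2]
    return f"_{word}_{coarse}" if coarse in CONTENT_TAGS else None


def role_to_lemmas(tokens):
    """Turn one SRL argument (a multi-word phrase) into a tuple of lemmas."""
    return tuple(lem for lem in (to_lemma(w, t) for w, t in tokens) if lem is not None)


def frame_to_triplet(frame):
    """Map an SRL frame to (lhs=subject, rel=verb, rhs=object), or None if it
    does not match the (subject, verb, direct object) template."""
    if not all(role in frame for role in ("A0", "V", "A1")):
        return None
    return (role_to_lemmas(frame["A0"]), role_to_lemmas(frame["V"]), role_to_lemmas(frame["A1"]))


# Hypothetical frame for "The ball was kicked by the boys." (words already lemmatized).
# The surface order is object, verb, subject, but the roles still give the template.
frame = {
    "A1": [("the", "DT"), ("ball", "NN")],
    "V": [("kick", "VBD")],
    "A0": [("the", "DT"), ("boy", "NNS")],
}
print(frame_to_triplet(frame))  # (('_boy_NN',), ('_kick_VB',), ('_ball_NN',))
```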

Step (2): Detection of MR entities

- The goal of the second step is to identify each semantic entity expressed in the sentence

- Given a relation triplet $(lhs^{lem}, rel^{lem}, rhs^{lem})$ whose elements are each associated with a tuple of lemmas, a corresponding triplet $(lhs^{syn}, rel^{syn}, rhs^{syn})$ is produced in which the lemmas are replaced by synsets

- Depending on the lemma, this can be easy

- Some lemmas, such as _television_program_NN or _world_war_ii_NN, correspond to a single synset

- or very hard

- _run_VB can map to 33 different synsets and _run_NN to 10

- Hence, in the proposed semantic parsing framework, an MR corresponds to a triplet of synsets $(lhs^{syn}, rel^{syn}, rhs^{syn})$, which can be rewritten in the form $rel^{syn}(lhs^{syn}, rhs^{syn})$

- Because the model is built around relation triplets, MRs and WordNet relations are cast into the same scheme

- ex) The WordNet relation (_score_NN_2, _has_part, _musical_notation_NN_1) fits the same pattern as an MR in which the WordNet relation type _has_part plays the role of the verb

Semantic Matching Energy

- The main contribution of this paper

- -> The energy function used to embed lemmas and WordNet entities into the same vector space

- The semantic matching energy function is used to predict the appropriate synset for a given lemma

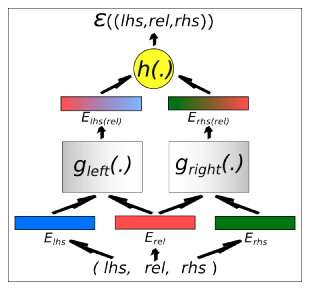

3.1 Framework

key concepts

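- 1) All symbolic entities (synsets, relation types, lemmas) are associated with a shared d-dimensional vector space called the "embedding space", following earlier work on neural language models

- These vectors are parameters of the model and are learned jointly so that the model performs well on the semantic parsing task

- 2) The semantic matching energy value of a given triplet $(lhs, rel, rhs)$ is computed by a parameterized function $ε$ that starts by mapping every symbol to its embedding

- $ε$ must also be able to handle variable-size arguments

- 3) The energy function $ε$ is optimized to be lower for training examples than for other possible configurations of symbols

- The semantic matching energy function can therefore distinguish plausible combinations of entities from implausible ones, which is what lets it select the most plausible sense of a lemma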

3.2 Parametrization

- 1) Each element of the triplet $(lhs, rel, rhs)$ is first mapped to its embedding: $E_{lhs}$, $E_{rel}$, $E_{rhs}$

- For tuples containing more than one symbol, an aggregation function is used

- 2) $E_{lhs}$ and $E_{rel}$ are combined by $g_{left}(.)$, which outputs $E_{lhs(rel)}$

- Likewise, $E_{rhs(rel)} = g_{right}(E_{rhs}, E_{rel})$

- 3) The energy $ε((lhs, rel, rhs))$ is obtained by combining $E_{lhs(rel)}$ and $E_{rhs(rel)}$ with a function $h(.)$

- The semantic matching energy function has a parallel structure

- First, the $(lhs, rel)$ and $(rel, rhs)$ pairs are combined separately

- Then, these semantic combinations are matched
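Below is a minimal pure-Python sketch of the parallel structure just described. Three parts of it are assumptions, not confirmed by this summary. The aggregation function is taken to be the mean of the embeddings in a tuple. $g_{left}$ and $g_{right}$ are taken to be linear maps of the form $W_1 E + W_2 E_{rel} + b$. $h$ is taken to be the negative dot product, so that well-matched pairs get low energy. All weights are random, so the printed energy means nothing until the model is trained.

```python
import random


def mean_vec(vectors):
    """Aggregation for multi-symbol tuples (assumed here to be a plain average)."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def add(*vectors):
    return [sum(col) for col in zip(*vectors)]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


class SemanticMatchingEnergy:
    def __init__(self, symbols, dim=4, seed=0):
        rng = random.Random(seed)

        def rand_vec():
            return [rng.gauss(0.0, 1.0) for _ in range(dim)]

        def rand_mat():
            return [rand_vec() for _ in range(dim)]

        # One shared embedding table for lemmas, synsets and relation types.
        self.E = {s: rand_vec() for s in symbols}
        self.Wl1, self.Wl2, self.bl = rand_mat(), rand_mat(), rand_vec()
        self.Wr1, self.Wr2, self.br = rand_mat(), rand_mat(), rand_vec()

    def embed(self, tuple_of_symbols):
        return mean_vec([self.E[s] for s in tuple_of_symbols])

    def g_left(self, e_lhs, e_rel):
        return add(matvec(self.Wl1, e_lhs), matvec(self.Wl2, e_rel), self.bl)

    def g_right(self, e_rhs, e_rel):
        return add(matvec(self.Wr1, e_rhs), matvec(self.Wr2, e_rel), self.br)

    def energy(self, lhs, rel, rhs):
        e_rel = self.embed(rel)
        e_lhs_rel = self.g_left(self.embed(lhs), e_rel)   # (lhs, rel) combined
        e_rhs_rel = self.g_right(self.embed(rhs), e_rel)  # (rel, rhs) combined
        return -dot(e_lhs_rel, e_rhs_rel)                 # h: matched pairs -> low energy


model = SemanticMatchingEnergy(["_cat_NN", "_eat_VB", "_fish_NN", "_mouse_NN"])
# A multi-word rhs tuple is averaged before entering g_right.
print(model.energy(("_cat_NN",), ("_eat_VB",), ("_fish_NN", "_mouse_NN")))
```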

3.3 Training Objective

- $C$: the dictionary containing all entities (relation types, lemmas, synsets)

- $C^*$: the set of tuples (or sequences) whose elements are taken from $C$
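This section only introduces the notation, yet steps 2 and 4 of the training algorithm in 4.2 refer to constraints (1)–(3) and criterion (4). A plausible reconstruction of their form is given below for a training triplet $x_i = (lhs_i, rel_i, rhs_i)$. It is consistent with the condition in step 4, but it is not copied from the paper.

$$
\begin{aligned}
&\forall i,\ \forall lhs' \in C^*:\ \ \varepsilon(x_i) < \varepsilon\big((lhs', rel_i, rhs_i)\big) \quad (1)\\
&\forall i,\ \forall rel' \in C^*:\ \ \varepsilon(x_i) < \varepsilon\big((lhs_i, rel', rhs_i)\big) \quad (2)\\
&\forall i,\ \forall rhs' \in C^*:\ \ \varepsilon(x_i) < \varepsilon\big((lhs_i, rel_i, rhs')\big) \quad (3)\\
&\min \sum_{i}\sum_{\tilde{x}} \max\big(0,\ 1 - \varepsilon(\tilde{x}) + \varepsilon(x_i)\big) \quad (4)
\end{aligned}
$$

Here $lhs' \neq lhs_i$ (and likewise for $rel'$ and $rhs'$), and $\tilde{x}$ ranges over the corrupted triplets built this way. The hinge in (4) is positive exactly when $ε(x_i) > ε(\tilde{x}) - 1$, which is the condition under which a gradient step is taken.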

3.4 Disambiguation of Lemma Triplets

- disambiguation

- The semantic matching energy function is applied to raw text to perform Step (2): Detection of MR entities

- → i.e. it is used to perform the word-sense disambiguation step

- A triplet of lemmas $((lhs_1^{lem}, lhs_2^{lem}, \ldots),(rel_1^{lem}, \ldots),(rhs_1^{lem}, \ldots))$ is labeled with synsets greedily, one lemma at a time

- greedy algorithm

- An algorithm that makes the best choice available at each step

- https://velog.io/@contea95/%ED%83%90%EC%9A%95%EB%B2%95%EA%B7%B8%EB%A6%AC%EB%94%94-%EC%95%8C%EA%B3%A0%EB%A6%AC%EC%A6%98

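- For example, to label $lhs_2^{lem}$, all other elements of the triplet are kept fixed as lemmas, and the synset that yields the lowest energy is chosen

- $C(syn|lem)$: the set of allowed synsets to which $lhs_2^{lem}$ can be mapped

- This is repeated for every lemma

- The paper always uses lemmas as context (it never uses synsets that have already been assigned)

- The procedure is efficient: for each position in the sentence it only has to compute a small number of energies, equal to the number of senses of the lemma

- However, because synsets and lemmas are used together in this crucial step, good representations (= good embedding vectors $E_i$) are required for both

- That is why multi-task training tries to jointly learn good embeddings for synsets and lemmas (and good parameters for the $g$ functions)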
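The greedy procedure above can be sketched like this. The `energy` argument stands in for the learned $ε$. `toy_energy`, `CANDIDATES`, and `PAIR_ENERGY` are hypothetical stand-ins invented for the example and are not WordNet data. Each trial substitutes one synset into the original lemma triplet, so earlier choices are never used as context. As a result, each position costs one energy evaluation per candidate sense in $C(syn|lem)$.

```python
def disambiguate(triplet, candidates, energy):
    """Greedy WSD over a lemma triplet ((lhs...), (rel...), (rhs...)).
    Each lemma is labeled in turn with the synset giving the lowest energy,
    while every other slot keeps its original lemma as context."""
    parts = [list(part) for part in triplet]
    labeled = [list(part) for part in triplet]
    for p, part in enumerate(parts):
        for k, lemma in enumerate(part):
            options = candidates.get(lemma, [lemma])  # C(syn|lem); unknown lemmas stay as-is

            def energy_with(syn):
                trial = [list(q) for q in parts]  # lemmas only, never earlier picks
                trial[p][k] = syn
                return energy(*(tuple(q) for q in trial))

            labeled[p][k] = min(options, key=energy_with)
    return tuple(tuple(part) for part in labeled)


# Hypothetical candidate senses and a toy energy standing in for the learned ε.
CANDIDATES = {
    "_composer_NN": ["_composer_NN_1"],
    "_write_VB": ["_write_VB_1", "_write_VB_2"],
    "_score_NN": ["_score_NN_1", "_score_NN_2"],
}
PAIR_ENERGY = {("_write_VB", "_score_NN_2"): -2.0, ("_write_VB", "_score_NN_1"): -0.5}


def toy_energy(lhs, rel, rhs):
    symbols = lhs + rel + rhs
    return sum(PAIR_ENERGY.get((a, b), 0.0) for a in symbols for b in symbols)


print(disambiguate((("_composer_NN",), ("_write_VB",), ("_score_NN",)), CANDIDATES, toy_energy))
```

With this toy energy, "_score_NN" resolves to the musical-score sense because it pairs best with the verb lemma "_write_VB". The two senses of "_write_VB" tie, so `min` simply keeps the first one listed.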

Multi-Task Training

4.1 Multiple Data Resources

To give the model as much common-sense knowledge as possible, several heterogeneous data sources are combined

- 1) WordNet v3.0 (WN)

- The main resource

- WordNet only contains relations between synsets, but the disambiguation process needs embeddings for both synsets and lemmas

- So two versions of the dataset were built in order to learn lemma embeddings as well

- “Ambiguated” WN

- The synset entities of each triplet are replaced by one of their corresponding lemmas

- The model is thus trained on many examples that resemble replacing a lemma with a synonym

- “Bridge” WN

- Designed to teach the model the link between synset and lemma embeddings

- In a relation tuple, the $lhs$ or the $rhs$ synset is replaced by a corresponding lemma (the other argument remains a synset)

- 221,017 triplets

- → validation set: 5,000 triplets / test set: 5,000 triplets
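The two WordNet variants can be sketched as follows. The synset-to-lemma lists are illustrative only: "mark" and "grade" for _score_NN_1 come from the example earlier in this post. Treating relation types such as `_hypernym` as untouched is an assumption, and `ambiguate` and `bridge` are hypothetical helper names.

```python
import random

# Illustrative synset -> lemma lists (not a full WordNet lookup).
LEMMAS = {
    "_score_NN_1": ["_score_NN", "_mark_NN", "_grade_NN"],
    "_evaluation_NN_1": ["_evaluation_NN"],
}
TRIPLET = ("_score_NN_1", "_hypernym", "_evaluation_NN_1")


def ambiguate(triplet, lemmas_of, rng):
    """'Ambiguated' WN: every synset is replaced by one of its lemmas;
    relation types (absent from lemmas_of) are left untouched."""
    return tuple(rng.choice(lemmas_of[s]) if s in lemmas_of else s for s in triplet)


def bridge(triplet, lemmas_of, rng):
    """'Bridge' WN: only lhs or rhs becomes a lemma; the other side stays a synset."""
    lhs, rel, rhs = triplet
    if rng.random() < 0.5:
        return (rng.choice(lemmas_of[lhs]), rel, rhs)
    return (lhs, rel, rng.choice(lemmas_of[rhs]))


rng = random.Random(0)
print(ambiguate(TRIPLET, LEMMAS, rng))
print(bridge(TRIPLET, LEMMAS, rng))
```

Because "Bridge" triplets mix one synset with one lemma, they give the model direct pairs linking the two kinds of embeddings, which the fully lemmatized "Ambiguated" triplets cannot provide.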

- 2) ConceptNet v2.1 (CN)

- A common-sense knowledge base

- Lemmas or groups of lemmas are linked by rich semantic relations

- Because it is based on lemmas rather than synsets, it does not distinguish between different word senses

- Only triplets containing lemmas from the WN dictionary are used

- 11,332 training triplets

- 3) Wikipedia (Wk)

- Used simply as raw text to give the model knowledge in an unsupervised way

- 50,000 articles yield more than 3 million examples

- 4) EXtended WordNet (XWN)

- Built from WordNet glosses (→ definitions) that were syntactically parsed and whose content words are semantically linked to WN synsets

- 776,105 training triplets

- validation set: 10,000 triplets

- 5) Unambiguous Wikipedia (Wku)

- If one of the lemmas unambiguously corresponds to a synset, and that synset also maps to other, ambiguous lemmas, a new triplet is created by replacing the unambiguous lemma with an ambiguous one

- -> An additional training set is built from triplets extracted from the Wikipedia corpus modified in this way

- This way, the true synset is known even in an ambiguous context

- 981,841 supervision triplets
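A sketch of how such supervised triplets could be generated follows. It assumes flat (lhs, rel, rhs) triplets of single lemmas and a toy sense inventory invented for illustration. `SENSES`, `LEMMAS`, and `make_wku_examples` are hypothetical names, and the real Wku set is built from triplets extracted from Wikipedia.

```python
# Toy sense inventory, invented for illustration (not real WordNet data).
SENSES = {
    "_student_NN": ["_pupil_NN_1"],               # unambiguous
    "_pupil_NN": ["_pupil_NN_1", "_pupil_NN_2"],  # ambiguous: learner / part of the eye
    "_read_VB": ["_read_VB_1", "_read_VB_2"],
    "_book_NN": ["_book_NN_1", "_book_NN_2"],
}
LEMMAS = {"_pupil_NN_1": ["_student_NN", "_pupil_NN"]}


def make_wku_examples(triplet, senses_of, lemmas_of):
    """Return (new_triplet, position, true_synset) for every way of swapping an
    unambiguous lemma for an ambiguous lemma of the same synset."""
    examples = []
    for pos, lemma in enumerate(triplet):
        synsets = senses_of.get(lemma, [])
        if len(synsets) != 1:
            continue  # start only from unambiguous lemmas
        true_syn = synsets[0]
        for other in lemmas_of.get(true_syn, []):
            if other != lemma and len(senses_of.get(other, [])) > 1:
                new = list(triplet)
                new[pos] = other  # now ambiguous, but the correct synset is known
                examples.append((tuple(new), pos, true_syn))
    return examples


print(make_wku_examples(("_student_NN", "_read_VB", "_book_NN"), SENSES, LEMMAS))
```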

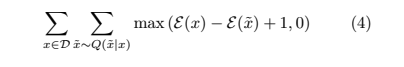

4.2 Training Algorithm

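- To train the parameters of $ε$, the procedure iterates over all training data resources and uses stochastic gradient descent

- Stochastic gradient descent (SGD)

- Instead of computing the gradient over the whole dataset, it updates the parameters using the gradient of a single example (or a small mini-batch), which makes each step much faster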

Training repeats the following steps

- 1. Randomly pick a positive training triplet $x_i$ from one of the example sources above (a triplet made of synsets, lemmas, or both)

- 2. Randomly pick one of the constraints (1), (2), (3)

- 3. Build a negative triplet $\tilde{x}$ by sampling an entity from the whole entity set $C$ to replace $lhs_{x_i}$, $rel_{x_i}$, or $rhs_{x_i}$, respectively

- 4. If $ε(x_i) > ε(\tilde{x}) − 1$, take a stochastic gradient descent (SGD) step to minimize criterion (4)

- 5. Enforce the constraint that every embedding vector is normalized: $||E_i|| = 1$, $∀i$

- The gradient step requires a learning rate $λ$

- The algorithm above is applied to all data sources except XWN and Wku

- The matrix $E$, which holds the representations of all entities, is learned through a complex multi-task learning procedure

- -> because a single embedding matrix is shared by all relations and all data sources

- As a result, an entity's embedding contains factorized information from every relation and data source in which the entity appears as $lhs$, $rhs$, or $rel$ (for verbs)

- The model is forced to learn, for each entity, how to interact with other entities in many different ways
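A compact sketch of steps 1 to 5 follows. It uses a deliberately simplified energy, $ε(x) = -(E_{lhs} + E_{rel}) \cdot (E_{rhs} + E_{rel})$, in place of the learned $g_{left}$, $g_{right}$, and $h$, so that the gradients can be written by hand. The toy data, the embedding size, and the learning rate value are assumptions, and the special handling of XWN and Wku is not shown. The key detail is that a single table `E` is shared by every source, which is what makes the training multi-task.

```python
import math
import random


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def energy(E, x):
    """Simplified stand-in for ε: -(E_lhs + E_rel) . (E_rhs + E_rel)."""
    lhs, rel, rhs = x
    left = [a + b for a, b in zip(E[lhs], E[rel])]
    right = [a + b for a, b in zip(E[rhs], E[rel])]
    return -sum(a * b for a, b in zip(left, right))


def energy_grads(E, x):
    """Hand-derived gradient of the simplified energy for each symbol in x."""
    lhs, rel, rhs = x
    left = [a + b for a, b in zip(E[lhs], E[rel])]
    right = [a + b for a, b in zip(E[rhs], E[rel])]
    grads = {}

    def accumulate(sym, g):  # a symbol may appear in several slots
        old = grads.get(sym, [0.0] * len(g))
        grads[sym] = [a + b for a, b in zip(old, g)]

    accumulate(lhs, [-r for r in right])
    accumulate(rhs, [-l for l in left])
    accumulate(rel, [-(l + r) for l, r in zip(left, right)])
    return grads


def sgd_step(E, sources, entities, rng, lam=0.01):
    x = rng.choice(rng.choice(sources))  # 1. positive triplet from a random source
    slot = rng.randrange(3)              # 2. constraint (1), (2) or (3)
    x_neg = list(x)
    x_neg[slot] = rng.choice(entities)   # 3. corrupt that slot with an entity from C
    x_neg = tuple(x_neg)
    if energy(E, x) <= energy(E, x_neg) - 1:
        return False                     # margin already satisfied: no update
    # 4. the hinge 1 - ε(x_neg) + ε(x) is active: descend dε(x) - dε(x_neg)
    g_pos, g_neg = energy_grads(E, x), energy_grads(E, x_neg)
    for sym in set(g_pos) | set(g_neg):
        zero = [0.0] * len(E[sym])
        gp, gn = g_pos.get(sym, zero), g_neg.get(sym, zero)
        updated = [e - lam * (a - b) for e, a, b in zip(E[sym], gp, gn)]
        E[sym] = normalize(updated)      # 5. enforce ||E_i|| = 1
    return True


rng = random.Random(0)
entities = ["_cat_NN", "_eat_VB", "_fish_NN", "_has_part", "_tail_NN"]
E = {s: normalize([rng.gauss(0.0, 1.0) for _ in range(8)]) for s in entities}
sources = [
    [("_cat_NN", "_eat_VB", "_fish_NN")],    # e.g. a text-derived source
    [("_cat_NN", "_has_part", "_tail_NN")],  # e.g. a WordNet-style source
]
updates = sum(sgd_step(E, sources, entities, rng) for _ in range(200))
print(updates, "updates")
```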

Experiments

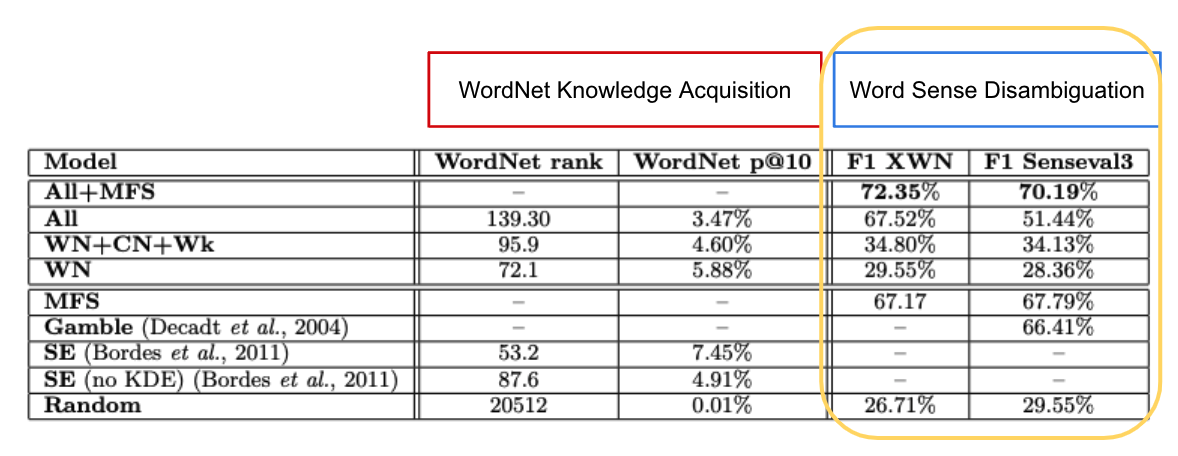

6.1 Benchmarks

- benchmark

- A standard against which the performance of different experiments or models can be compared

- https://ifdean.tistory.com/3

- To evaluate the effect of multi-task joint training and of the various data sources, models trained on different combinations of the data sources are evaluated on two benchmark tasks

- WordNet knowledge encoding

- WSD (Word Sense Disambiguation)

- WN: model trained only on WordNet → “Ambiguated” WordNet and “Bridge” WordNet

- WN+CN+Wk: model trained on WordNet, ConceptNet, and Wikipedia data

- All: model trained on all data sources

- MFS: uses the Most Frequent Sense, based on WordNet frequencies

- All+MFS: the best-performing model

- SE (Bordes et al., 2011): Structured Embeddings

- For an explanation of the SE model, see -> https://velog.io/@raqoon886/StructuredEmbeddings

1) Knowledge Acquisition

- The ability to generalize to new relations from the given knowledge (→ the training relations) is measured as follows

- For each test WordNet triplet, the left or right entity is removed and replaced in turn by each of the 41,024 synsets in the dictionary

- The model computes the energies of these triplets, sorts them in ascending order, and records the rank of the correct synset

- Then the mean predicted rank (→ the average of those ranks), the WordNet rank, the precision@10 (= p@10 → the proportion of ranks between 1 and 10, divided by 10), and the WordNet p@10 are measured

- P@10 = Precision at 10

- precision

- -> Among the items the model labels as True, the proportion that are actually True

- Precision at K

- -> Precision computed over the top K results

- generalize: generalization

- Making a trained model perform well on new data it has not seen

- https://glanceyes.tistory.com/entry/Deep-Learning-%EC%B5%9C%EC%A0%81%ED%99%94Optimization
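The ranking protocol and the two summary metrics can be sketched as follows. The energy function and the candidate names are hypothetical toy values; in the real evaluation the candidates are all 41,024 synsets and the energy is the trained model. Since `sorted` is stable, ties are broken by candidate order, which is an assumption because the protocol above does not say how ties are handled.

```python
def rank_of_true(energy_fn, triplet, candidates, side):
    """Replace triplet[side] (0 = lhs, 2 = rhs) by every candidate, sort by
    ascending energy, and return the 1-based rank of the original entity."""
    def corrupted(c):
        t = list(triplet)
        t[side] = c
        return tuple(t)
    ranked = sorted(candidates, key=lambda c: energy_fn(corrupted(c)))
    return ranked.index(triplet[side]) + 1


def mean_rank(ranks):
    return sum(ranks) / len(ranks)


def precision_at_10(ranks):
    # One correct entity per query: the proportion of ranks in [1, 10], divided by 10.
    return sum(1 for r in ranks if r <= 10) / len(ranks) / 10


toy_energy = {"_x_NN_1": 2.0, "_y_NN_1": 0.5, "_z_NN_1": 1.0}
r = rank_of_true(lambda t: toy_energy[t[2]], ("_a_NN_1", "_hypernym", "_z_NN_1"),
                 list(toy_energy), side=2)
print(r)                                # 2
print(mean_rank([1, 3, 12, 40]))        # 14.0
print(precision_at_10([1, 3, 12, 40]))  # 0.05
```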

- The model trained only on WordNet (WN) performs slightly worse than SE

- SE (Bordes et al. (2011)) stacks a KDE (Kernel Density Estimator) on top of the structured embeddings to improve its predictions

- Kernel Density Estimation (KDE)

- A density estimation method that uses a kernel function

- https://seongkyun.github.io/study/2019/02/03/KDE/

- Compared with SE without KDE (no KDE) (Bordes et al., 2011), WN performs better

- The WN+CN+Wk and All models, which are multi-tasked with other data, perform slightly worse than the WordNet-only WN model but still encode WordNet knowledge well

- When multi-tasking with raw text, the number of relation types grows from 18 to several thousand

- With so many relations, the model has to learn more complex similarities

- → Adding text relations makes the task of extracting knowledge from WordNet harder

- This degradation is a limitation of the multi-tasking process, although performance is still very good, given that the ranks in the figure above are computed over more than 41,024 entities

- Moreover, multi-tasking makes it possible to combine several training sources that matter for WSD and semantic parsing

2) Word Sense Disambiguation(WSD)

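- WSD performance is evaluated on two test sets

- the XWN test set

- a subset of the SensEval-3 English All-words WSD task

- cf) SensEval-3

- For the SensEval-3 data, the original data is processed with the Inference Procedure described earlier, and only (subject, verb, direct object) triplets whose lemmas all belong to the vocabulary defined by WordNet are kept

- Measured with the F1 score

- The gap between the WN and WN+CN+Wk models shows that, even without direct supervision, the model can extract meaningful information from text and use it to disambiguate some words (WN+CN+Wk performs much better than the Random and WN models)

- The All+MFS model achieves the best performance of all methods tried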
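For reference, here is a sketch of the usual Senseval-style F1 for WSD. Precision is computed over the instances the system answered, and recall over all gold instances. The paper's exact scoring script is not described in this summary, so treat this as an assumption. The instance IDs and synsets below are made up.

```python
def wsd_f1(predictions, gold):
    """predictions: {instance_id: synset, or None if the system gave no answer}
    gold: {instance_id: correct synset}"""
    attempted = [i for i, syn in predictions.items() if syn is not None]
    correct = sum(1 for i in attempted if gold.get(i) == predictions[i])
    precision = correct / len(attempted) if attempted else 0.0
    recall = correct / len(gold) if gold else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


gold = {1: "_score_NN_2", 2: "_run_VB_1", 3: "_bank_NN_1", 4: "_plant_NN_2"}
pred = {1: "_score_NN_2", 2: "_run_VB_3", 3: "_bank_NN_1", 4: None}
print(wsd_f1(pred, gold))  # P = 2/3, R = 2/4, so F1 = 4/7
```

When the system answers every instance, precision equals recall, and F1 reduces to plain accuracy.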

6.2 Representations

1) Entity Embeddings

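- -> Nearest neighbors of some entities in the embedding space defined by the All model

- As expected, the neighbors are a mix of lemmas and synsets

- The neighbors of a lemma are other generic lemmas, whereas the neighbors of two different synsets (of the same lemma) are mostly synsets with clearly different meanings

- The second row shows that for common lemmas (first column) the neighbors are also generic lemmas, whereas precise lemmas (second column) lie close to synsets that define sharp meanings

- The neighbor list for _different_JJ_1 (third column) shows that the learned embeddings do not encode antonymy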
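A neighbor table like this can be produced with a simple nearest-neighbor query. The sketch below uses cosine similarity on hypothetical 2-D embeddings. Because the training constraint keeps every embedding at unit norm, ranking by cosine similarity gives the same order as ranking by Euclidean distance ($\|u - v\|^2 = 2 - 2\cos(u, v)$). Which measure the paper actually used is not stated here.

```python
import math


def cosine(u, v):
    return sum(a * b for a, b in zip(u, v)) / (
        math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nearest_neighbors(E, query, k=3):
    """Return the k symbols (lemmas or synsets) closest to `query`."""
    scored = [(cosine(E[query], vec), sym) for sym, vec in E.items() if sym != query]
    scored.sort(reverse=True)
    return [sym for _, sym in scored[:k]]


# Hypothetical embeddings mixing lemmas and synsets.
E = {
    "_score_NN": [1.0, 0.2],
    "_grade_NN": [0.9, 0.1],
    "_score_NN_2": [0.0, 1.0],
    "_musical_notation_NN_1": [0.1, 0.9],
}
print(nearest_neighbors(E, "_score_NN_2", k=2))  # ['_musical_notation_NN_1', '_score_NN']
```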

2) WordNet Enrichment

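- Because WordNet and ConceptNet use a limited number of relation types (→ fewer than 20, ex. _has_part, _hypernym), they do not treat most verbs as relations

- Thanks to multi-task training and the unified representation of MRs and WordNet/ConceptNet relations, the model can potentially generalize to such relations that do not exist in WordNet

- -> Lists of predicted synsets for relation types that exist in neither knowledge base (WordNet or ConceptNet)

- TextRunner (Yates et al., 2007): an information extraction tool that extracted information from 100 million web pages, used for comparison with the 50,000 Wikipedia articles used in the paper

- The results of both the paper's All model and TextRunner seem to reflect common sense

- Unlike the All model, however, TextRunner does not disambiguate the different senses of a lemma, so it cannot link its knowledge to existing resources and enrich them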

Conclusion

- The paper presents a large-scale system for semantic parsing that maps raw text to disambiguated MRs

- key contributions

- An energy-based model that scores triplets of relations between ambiguous lemmas and unambiguous entities (synsets)

- Multi-task training of the model across several resources, so that it can effectively learn to build disambiguated MRs from raw text with relatively limited supervision

- By generalizing knowledge from several resources and linking it to raw text, the final system can potentially capture the deep meaning of sentences within its energy function

References

- https://kilian.evang.name/sp/lectures/intro.pdf

- Meaning Representation and SRL: assuming there is some meaning (towardsdatascience.com)

- Chap01-2 : WordNet, Part-Of-Speech(POS): https://excelsior-cjh.tistory.com/64

- Multi-task Learning: https://velog.io/@riverdeer/Multi-task-Learning

- Knowledge acquisition - Wikipedia: https://en.wikipedia.org/wiki/Knowledge_acquisition

- Word Sense Disambiguation techniques: https://bab2min.tistory.com/576

- [ICLR 2021] Part 2: Generative model trends at ICLR 2021 (LG AI Research): https://post.naver.com/viewer/postView.naver?volumeNo=31743752&memberNo=52249799

- Exploiting links in WordNet hierarchy for word sense disambiguation of nouns | Semantic Scholar (www.semanticscholar.org)

- Text Preprocessing for NLP and Machine Learning Tasks: https://medium.com/sciforce/text-preprocessing-for-nlp-and-machine-learning-tasks-3e077aa4946e

- Part Of Speech Tagging – POS Tagging in NLP | byteiota: https://byteiota.com/pos-tagging/

- (NLP basics) Document information extraction: https://jynee.github.io/NLP%EA%B8%B0%EC%B4%88_3/

- Papers with Code - Semantic Role Labeling: https://paperswithcode.com/task/semantic-role-labeling

- Greedy algorithms (velog.io)

- A look at deep learning, part 2: https://seamless.tistory.com/38

- NLP benchmark datasets: English and Korean (ifdean.tistory.com)

- SE paper review - Learning Structured Embeddings of Knowledge Bases: https://velog.io/@raqoon886/StructuredEmbeddings

- Generalization and optimization in deep learning: https://glanceyes.tistory.com/entry/Deep-Learning-%EC%B5%9C%EC%A0%81%ED%99%94Optimization

- precision at K, MAP, recall at K: https://ddiri01.tistory.com/321

- Kernel Density Estimation · Seongkyun Han's blog: https://seongkyun.github.io/study/2019/02/03/KDE/

- [NLP] Essential concepts for natural language processing: Language model, Representation: https://wdprogrammer.tistory.com/35
