[Word2Vec] Distributed Representations of Words and Phrases and their Compositionality

2022. 8. 11. 00:10


The Skip-gram model of Word2Vec


💡 Key concepts before we start

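- Distributed Representation

- Based on the distributional hypothesis ("words that appear in similar contexts have similar meanings"), a word's vector is determined by the distribution of the words around it, and its meaning is spread across many vector dimensions; hence the name distributed representation

- cf) One-hot encoding

- Vectorizes a categorical variable

- ex) [1 0 0 0], [0 1 0 0], [0 0 1 0], [0 0 0 1]

- Drawbacks: the cosine similarity between any two different words is 0, so relationships between words cannot be captured; and the dimensionality equals the vocabulary size, so it becomes very large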
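A quick way to see the first drawback: the sketch below builds one-hot vectors for a hypothetical four-word vocabulary with NumPy and computes their cosine similarity. The vocabulary and the `cosine` helper are illustrative assumptions, not part of the paper.

```python
import numpy as np

# Hypothetical 4-word vocabulary; row i of the identity matrix is word i's one-hot vector
vocab = ["king", "queen", "apple", "banana"]
one_hot = np.eye(len(vocab))

def cosine(u, v):
    """Cosine similarity between two 1-D vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

# Distinct one-hot vectors are orthogonal, so every pair scores 0,
# whether the words are related (king/queen) or not (king/apple)
sim_king_queen = cosine(one_hot[0], one_hot[1])
sim_king_apple = cosine(one_hot[0], one_hot[2])
print(sim_king_queen, sim_king_apple)  # 0.0 0.0

# The vector length equals the vocabulary size
print(one_hot.shape)  # (4, 4)
```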

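- Embedding

- Solves the drawbacks of one-hot encoding → relationships between words can be captured

- Represents each word as a vector of fixed dimensionality ⇒ maps things that are not vectors to fixed-length vectors

- Each element of the vector takes a continuous value → ex) [0.04227, -0.0033, 0.1607, -0.0236, ...]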
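Here is one common way to implement the lookup, sketched with NumPy using a small random matrix (`W`, the sizes, and the seed are hypothetical). Multiplying a one-hot vector by an embedding matrix simply selects one row. In practice, then, an embedding layer is a table lookup that returns a fixed-length dense vector.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, embed_dim = 5, 3                   # hypothetical sizes for illustration
W = rng.normal(size=(vocab_size, embed_dim))   # embedding matrix: one row per word

word_id = 2
one_hot = np.zeros(vocab_size)
one_hot[word_id] = 1.0

# one_hot @ W picks out row `word_id`, so the product is never actually needed
via_matmul = one_hot @ W
via_lookup = W[word_id]
print(np.allclose(via_matmul, via_lookup))  # True
print(via_lookup.shape)                     # (3,): a fixed-length dense vector
```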

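- Word2Vec

- A method for representing words as vectors

- One of the most widely used embedding methods

- Uses the relationship between a word and the words within a window around it, e.g. two words on each side (window size = 2)

- → Reflects the distributional hypothesis well

- The representation of the target word being vectorized is determined by the words around it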

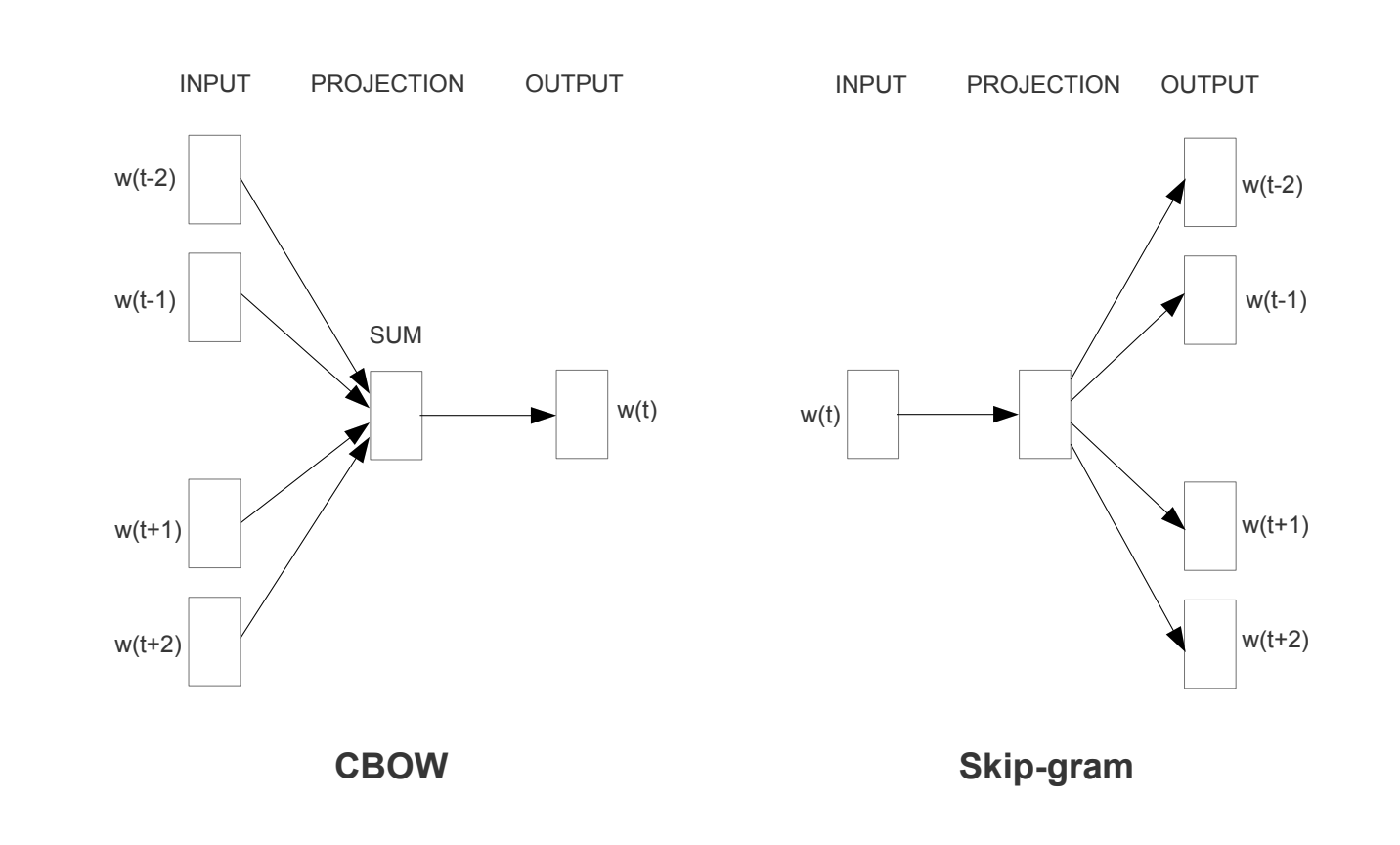

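- CBoW (Continuous Bag-of-Words)

- Predicts the center word from the surrounding words

- Skip-gram

- Predicts the surrounding words from the center word

- Often reported to perform better than CBoW, because each center word receives more updates during backpropagation (one per context word) ⇒ the weights end up with more meaningful values

- Drawback: more computation → more resources (= higher cost)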
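To show how the training examples differ, here is a minimal plain-Python sketch. The helper names `skipgram_pairs` and `cbow_examples` are hypothetical, and the examples are built from one sentence with window size 2. Skip-gram produces one (center, context) pair per context word, while CBoW produces one example per center word. That difference is one way to see why Skip-gram needs more computation.

```python
def skipgram_pairs(tokens, window=2):
    """(center, context) pairs: Skip-gram predicts each context word from the center."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

def cbow_examples(tokens, window=2):
    """(context words, center) examples: CBoW predicts the center from its context."""
    examples = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, center))
    return examples

sentence = "the quick brown fox jumps".split()
sg = skipgram_pairs(sentence)
cb = cbow_examples(sentence)
print(len(sg), len(cb))  # 14 5
print(sg[:3])            # [('the', 'quick'), ('the', 'brown'), ('quick', 'the')]
print(cb[2])             # (['the', 'quick', 'fox', 'jumps'], 'brown')
```

For this 5-word sentence, the sketch yields 14 Skip-gram pairs versus 5 CBoW examples.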

Abstract

- The paper presents several extensions to the Skip-gram model that improve both the quality of the vectors and the training speed

- subsampling

- negative sampling

- Subsampling frequent words yields a significant speedup and also learns more regular word representations

- Presents negative sampling, a simple alternative to the hierarchical softmax

- An inherent limitation of word representations is their indifference to word order and their inability to represent idiomatic phrases

- ex) The meanings of "Canada" and "Air" cannot easily be combined to obtain "Air Canada"

- Motivated by this example, the paper presents a simple method for finding phrases in text and shows that learning good vector representations for millions of phrases is possible

Introduction

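- Skip-gram model: an efficient method for learning high-quality vector representations of words from large amounts of unstructured text data

- The training objective of the Skip-gram model is to learn word vector representations that are good at predicting the surrounding words

- Unlike most neural network architectures previously used for learning word vectors, training the Skip-gram model does not involve dense matrix multiplications

- → This makes training extremely efficient (an optimized single-machine implementation can train on more than 100 billion words in one day)

- The paper presents several extensions of the original Skip-gram model

- Subsampling frequent words during training gives a significant speedup (around 2x to 10x) and improves the accuracy of the representations of less frequent words

- Presents a simplified variant of Noise Contrastive Estimation (NCE) for training the Skip-gram model

- → Compared with the more complex hierarchical softmax used in prior work, it gives faster training and better vector representations for frequent words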

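- Noise Contrastive Estimation (NCE)

- A loss-computation technique that can be used to train CBOW and Skip-gram models

- Instead of applying the softmax over the entire vocabulary, it computes scores only for a sampled subset of words

- Basic algorithm: draw k contrastive words from a noise distribution and take their (Monte Carlo) average

- Other ways to avoid the full softmax include hierarchical softmax and negative sampling

- Generally used when the vocabulary is large

- NCE turns the problem into a binary classification task: distinguishing <samples from the true distribution> from <samples from an artificially constructed noise distribution>

- The objective function used in Negative Sampling is designed to be maximized

- It assigns a high probability to the current (= target, positive) word and a low probability to the other (= negative, noise) words, and the objective is largest when both hold

- Efficient because, instead of computing over all V words in the vocabulary, only the k selected noise words need to be computed

- TensorFlow → implemented as tf.nn.nce_loss()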
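To make the cost argument concrete, the rough count below compares how many output vectors (dot products) a single training pair touches under each approach. It assumes the 692K-word vocabulary reported in the paper's experiments and k = 5. For hierarchical softmax it also assumes a balanced binary tree. A Huffman tree is unbalanced and gives frequent words shorter paths.

```python
import math

V = 692_000  # vocabulary size used in the paper's experiments
k = 5        # negative samples per positive word (NEG-5)

full_softmax = V                        # one score per vocabulary word
negative_sampling = k + 1               # 1 positive word + k noise words
hierarchical = math.ceil(math.log2(V))  # inner nodes on a root-to-leaf path (balanced tree)

print(full_softmax, negative_sampling, hierarchical)  # 692000 6 20
```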


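- Word representations are limited in that they cannot represent idiomatic phrases that are not compositions of the individual words

- ex) "Boston Globe" (a daily newspaper in the US) refers to a newspaper; it is not a natural combination of the meanings of "Boston" and "Globe"

- Therefore, using vectors to represent whole phrases makes the Skip-gram model considerably more expressive

- Extending word-based models to phrase-based models is relatively simple

- 1) Identify a large number of phrases using a data-driven approach

- 2) Treat the phrases as individual tokens during training

- To evaluate the quality of the phrase vectors, the authors developed a test set of analogical reasoning tasks that contains both words and phrases

- Analogical Reasoning Task

- A test in which, given a word pair such as "(Athens, Greece)" and another word "Oslo", the model must produce the word that completes the same relationship (here, "Norway")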


- A typical analogy pair from the test set

- "Montreal":"Montreal Canadiens"::"Toronto":"Toronto Maple Leafs"

- The answer counts as correct if the representation closest to vec("Montreal Canadiens") - vec("Montreal") + vec("Toronto") is vec("Toronto Maple Leafs")

- The authors found that simple vector addition can often produce meaningful results

- ex) vec("Russia") + vec("river") is close to vec("Volga River")

- vec("Germany") + vec("capital") is close to vec("Berlin")

- This compositionality suggests that a non-obvious degree of language understanding can be obtained by applying basic mathematical operations to word vector representations

2.1 Hierarchical Softmax

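- Hierarchical softmax is a computationally efficient approximation of the full softmax

- Its main advantage: to obtain the probability distribution, it evaluates only about $\log_2(W)$ nodes instead of the $W$ output nodes of the neural network ($W$ = number of words in the vocabulary)

- Hierarchical softmax uses a binary tree representation of the output layer, with the $W$ words as its leaves

- For each inner node, the tree explicitly represents the relative probabilities of its child nodes

- These define a random walk that assigns probabilities to words

- The structure of the tree used by hierarchical softmax has a considerable effect on performance

- The paper uses a binary Huffman tree, which assigns short codes to frequent words, resulting in fast training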
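For reference, the paper defines the hierarchical softmax probability as a product of sigmoids along the path from the root to the word. In this definition:

- $n(w, j)$ is the $j$-th node on that path, and $L(w)$ is the path length
- $ch(n)$ is an arbitrary fixed child of node $n$
- $[\![x]\!]$ is 1 if $x$ is true and -1 otherwise
- $v'_n$ is the vector of inner node $n$

$$p(w \mid w_I) = \prod_{j=1}^{L(w)-1} \sigma\left( [\![ n(w, j+1) = ch(n(w, j)) ]\!] \cdot {v'_{n(w,j)}}^{\top} v_{w_I} \right)$$

Because $\sigma(x) + \sigma(-x) = 1$, these probabilities sum to 1 over all $W$ words. The cost of computing $\log p(w \mid w_I)$ is proportional to $L(w)$, which on average is no greater than $\log W$.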

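- Hierarchical Softmax

- A method that approximates the softmax while drastically reducing its computational cost

- In Word2Vec, used when training the skip-gram model as an alternative to negative sampling (the original word2vec tool also allows enabling both at once)

- Huffman tree

- Word2Vec builds a Huffman tree whose leaves are all the words in the vocabulary

- A Huffman tree is a binary tree in which the depth of each item depends on how often it occurs

- Word2Vec places frequent words shallow in the tree and rare words deep

- The probabilities of all leaves (words) add up to 1, so they form a probability distribution that can be used like an ordinary softmax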
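Here is a minimal NumPy sketch of the two ideas above, using hypothetical word counts and random (untrained) node vectors. It builds a Huffman tree with `heapq`, then computes $p(w \mid w_I)$ as a product of sigmoids along each word's path. Frequent words end up closer to the root. Because $\sigma(x) + \sigma(-x) = 1$ at every inner node, the leaf probabilities sum to 1 with no normalization over the vocabulary. This illustrates the technique; it is not the word2vec tool's actual code.

```python
import heapq
import itertools
import numpy as np

def build_huffman(freqs):
    """Build a Huffman tree; paths[word] lists (inner_node_id, sign) from root to leaf.
    sign is +1 for the left child and -1 for the right child."""
    tie = itertools.count()  # tie-breaker so heapq never compares node tuples
    heap = [(f, next(tie), ("leaf", w)) for w, f in freqs.items()]
    heapq.heapify(heap)
    n_inner = 0
    while len(heap) > 1:
        f1, _, left = heapq.heappop(heap)   # merge the two least frequent subtrees
        f2, _, right = heapq.heappop(heap)
        heapq.heappush(heap, (f1 + f2, next(tie), ("inner", n_inner, left, right)))
        n_inner += 1

    paths = {}
    def walk(node, path):
        if node[0] == "leaf":
            paths[node[1]] = path
        else:
            _, node_id, left, right = node
            walk(left, path + [(node_id, +1)])
            walk(right, path + [(node_id, -1)])
    walk(heap[0][2], [])
    return paths, n_inner

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

freqs = {"the": 50, "of": 30, "paris": 5, "france": 4, "volga": 1}  # hypothetical counts
paths, n_inner = build_huffman(freqs)

rng = np.random.default_rng(0)
dim = 8
inner_vecs = rng.normal(size=(n_inner, dim))  # one vector v'_n per inner node
v_input = rng.normal(size=dim)                # input vector v_{w_I} of the center word

def hs_prob(word):
    """p(word | w_I): product of sigmoids along the root-to-leaf path."""
    p = 1.0
    for node_id, sign in paths[word]:
        p *= sigmoid(sign * (inner_vecs[node_id] @ v_input))
    return p

probs = {w: hs_prob(w) for w in freqs}
code_lengths = {w: len(path) for w, path in paths.items()}
print(code_lengths)                   # frequent words get shorter paths
print(round(sum(probs.values()), 6))  # 1.0
```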

2.2 Negative Sampling

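- An alternative to hierarchical softmax is Noise Contrastive Estimation (NCE)

- NCE posits that a good model should be able to differentiate data from noise by means of logistic regression

- While NCE can be shown to approximately maximize the log probability of the softmax, the Skip-gram model only aims to learn high-quality vector representations

- Therefore, the authors were free to simplify NCE as long as the vector representations retained their quality

- The main difference between Negative sampling and NCE is that NCE needs both samples and the numerical probabilities of the noise distribution, whereas negative sampling uses only samples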
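To make "uses only samples" concrete: the paper's negative sampling objective replaces every $\log p(w_O \mid w_I)$ term of the Skip-gram objective with the expression below. Here $v_{w_I}$ is the input vector of the center word, $v'_{w}$ are output vectors, and $\sigma$ is the sigmoid. The $k$ negative words are drawn from a noise distribution $P_n(w)$:

$$\log \sigma\left({v'_{w_O}}^{\top} v_{w_I}\right) + \sum_{i=1}^{k} \mathbb{E}_{w_i \sim P_n(w)}\left[\log \sigma\left(-{v'_{w_i}}^{\top} v_{w_I}\right)\right]$$

Unlike NCE, no probability values of $P_n(w)$ appear in this expression. The noise distribution is used only to draw the negative words.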

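- The paper's figure of country and capital-city vectors shows that the model can automatically organize concepts and implicitly learn the relationships between them, even though no supervised information about what a capital city means was provided during training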

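- Negative Sampling

- In the last step of the Word2Vec model, the softmax function at the output layer transforms a vocabulary-sized vector so that every value lies between 0 and 1 and all values sum to 1

- The error is then computed, and backpropagation adjusts the embeddings of all words

- This happens even for words that are completely unrelated to the center word or the context words

- → If the vocabulary has millions of words, this becomes a very heavy operation

- Negative Sampling makes this more efficient: when updating embeddings, it adjusts only a small subset of words rather than the whole vocabulary

- This subset consists of positive samples (words that appeared around the center word) and negative samples (words drawn at random, which generally did not appear around the center word)

- ⇒ All parameters related to the center word are updated, but only a few sampled unrelated parameters are updated

- The paper reports that values of k (the number of negative samples) in the range 5-20 are useful for small training datasets, while for large datasets k can be as small as 2-5

- Sampling is designed so that words frequent in the corpus are more likely to be drawn (the paper uses the unigram distribution raised to the 3/4 power)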
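The sketch below shows one negative-sampling step in NumPy, under these assumptions:

- a hypothetical six-word vocabulary with made-up counts
- random input/output matrices `W_in` and `W_out`
- k = 5

It draws the k negatives from the unigram distribution raised to the 3/4 power, which gives rare words more weight than the raw unigram distribution. It then evaluates the objective for a single (center, context) pair. Only k + 1 rows of `W_out` are involved. Gradient updates are omitted.

```python
import numpy as np

rng = np.random.default_rng(42)

counts = np.array([500, 300, 100, 50, 10, 5], dtype=float)  # hypothetical unigram counts
vocab_size, dim, k = len(counts), 8, 5

# Noise distribution: unigram counts raised to the 3/4 power, then normalized
noise = counts ** 0.75
noise /= noise.sum()

W_in = rng.normal(scale=0.1, size=(vocab_size, dim))   # input vectors v_w
W_out = rng.normal(scale=0.1, size=(vocab_size, dim))  # output vectors v'_w

def log_sigmoid(x):
    # log(sigmoid(x)) = -log(1 + exp(-x)), computed stably
    return -np.logaddexp(0.0, -x)

def neg_sampling_objective(center, context):
    """Objective for one (center, context) pair; training maximizes this value."""
    v_in = W_in[center]
    negatives = rng.choice(vocab_size, size=k, p=noise)   # k noise words
    positive_term = log_sigmoid(W_out[context] @ v_in)
    negative_terms = log_sigmoid(-(W_out[negatives] @ v_in)).sum()
    return positive_term + negative_terms, negatives

obj, negs = neg_sampling_objective(center=2, context=3)
print(np.round(counts / counts.sum(), 4))  # raw unigram probabilities
print(np.round(noise, 4))                  # smoothed: rare words sampled more often
print(obj, negs)
```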

2.3 Subsampling of Frequent Words

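- In large corpora, the most frequent words (ex. "in", "the", "a") can occur hundreds of millions of times, but such frequent words usually carry less information than rare words

- For example, the Skip-gram model benefits much less from observing co-occurrences of "France" and "the" than from co-occurrences of "France" and "Paris", because nearly every word co-occurs with "the" within a sentence

- The vector representations of frequent words do not change significantly after training on several million examples

- To counter the imbalance between rare and frequent words, the paper uses a simple subsampling approach

- Each word $w_i$ in the training set is discarded with probability $P(w_i) = 1 - \sqrt{t / f(w_i)}$

- $P(w_i)$: the probability of discarding the word; the higher its frequency $f(w_i)$, the more likely it is dropped

- $f(w_i)$: the frequency of word $w_i$

- $t$: a chosen threshold, typically around $10^{-5}$

- This formula aggressively subsamples words whose frequency is greater than $t$ while preserving the ranking of the frequencies

- This accelerates learning and significantly improves the accuracy of the learned vectors of rare words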

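- Subsampling

- Randomly removes occurrences of frequent words from the training data (the more frequent the word, the more often it is dropped)

- Works like a soft form of stop-word removal

-> See the figure below for an explanation of the formula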

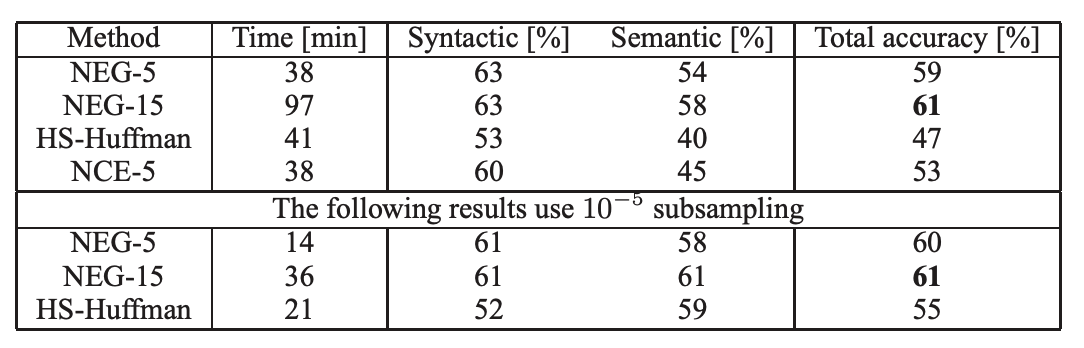
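Here is a small sketch of the discard probability $P(w_i) = 1 - \sqrt{t / f(w_i)}$. It takes relative frequencies as fractions of all tokens and uses $t = 10^{-5}$. The formula becomes negative when $f(w_i) < t$. This sketch clips it at 0, so such words are never discarded; that clipping is an assumption here. The frequencies below are hypothetical.

```python
import numpy as np

def discard_prob(freq, t=1e-5):
    """P(w) = 1 - sqrt(t / f(w)), clipped at 0 so words with f(w) <= t are always kept."""
    return np.maximum(0.0, 1.0 - np.sqrt(t / np.asarray(freq, dtype=float)))

# Hypothetical relative frequencies f(w) = count(w) / total number of tokens
freqs = {"the": 0.05, "france": 1e-4, "volga": 1e-6}
for word, f in freqs.items():
    print(word, round(float(discard_prob(f)), 4))
# the 0.9859
# france 0.6838
# volga 0.0
```

The ranking is preserved: the more frequent a word, the higher its discard probability. Words rarer than $t$ are left untouched.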

Empirical Results

- Evaluated Hierarchical Softmax (HS), Noise Contrastive Estimation, Negative Sampling, and subsampling of the training words

- Used the analogical reasoning task

- The task consists of analogies such as "Germany" : "Berlin" :: "France" : ?

- These analogies are solved by finding the vector vec(x) closest to vec("Berlin") - vec("Germany") + vec("France") according to cosine distance → the answer is correct if x is "Paris"

- The task has two broad categories

- syntactic analogies → "quick" : "quickly" :: "slow" : "slowly"

- semantic analogies → e.g. the country-capital city relationship
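The evaluation rule above can be sketched as a nearest-neighbor search by cosine similarity. The 3-D vectors are hand-made and hypothetical; real Skip-gram vectors have hundreds of dimensions. This sketch also assumes the common convention of excluding the three question words from the candidates.

```python
import numpy as np

# Hand-made vectors: (capital - country) is the same offset for both pairs
vecs = {
    "germany": np.array([1.0, 0.0, 0.2]),
    "berlin":  np.array([1.0, 1.0, 0.2]),
    "france":  np.array([0.0, 0.1, 1.0]),
    "paris":   np.array([0.0, 1.1, 1.0]),
    "quickly": np.array([0.5, -1.0, 0.5]),
}

def normalize(v):
    return v / np.linalg.norm(v)

def solve_analogy(a, b, c, vecs):
    """Return the word x whose vector is most cosine-similar to vec(b) - vec(a) + vec(c)."""
    target = normalize(vecs[b] - vecs[a] + vecs[c])
    best, best_sim = None, -np.inf
    for word, v in vecs.items():
        if word in (a, b, c):  # exclude the question words themselves
            continue
        sim = float(normalize(v) @ target)
        if sim > best_sim:
            best, best_sim = word, sim
    return best

answer = solve_analogy("germany", "berlin", "france", vecs)
print(answer)  # paris
```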

- To train the Skip-gram models, a large dataset of various news articles was used (an internal Google dataset with one billion words)

- All words that occurred fewer than 5 times in the training data were removed from the vocabulary → vocabulary size: 692K

- NEG-$k$: Negative Sampling with $k$ negative samples for each positive sample

- NCE: Noise Contrastive Estimation

- HS-Huffman: Hierarchical Softmax with frequency-based Huffman codes

- On the analogical reasoning task, Negative Sampling outperformed Hierarchical Softmax and even performed slightly better than NCE

- Subsampling of frequent words sped up training several times and made the word representations significantly more accurate

- One could argue that the linearity of the skip-gram model makes its vectors more suitable for such linear analogical reasoning

- However, results from prior work cited in the paper show that vectors learned by the highly non-linear standard sigmoidal RNN also improve significantly on this task as the amount of training data increases

- This suggests that non-linear models also have a preference for a linear structure of the word representations

Learning Phrases

- The meaning of a phrase is not simply a composition of the meanings of its individual words

- To learn vector representations for phrases, the paper first finds words that appear frequently together and infrequently in other contexts

- ex) "New York Times" and "Toronto Maple Leafs" are replaced by unique tokens in the training data, while the bigram "this is" remains unchanged

- → This way, many reasonable phrases can be formed without greatly increasing the vocabulary size

- Uses a simple data-driven approach in which phrases are formed based on unigram and bigram counts

- N-gram

- A sequence of n consecutive words

- Splits the corpus into chunks of n words and treats each chunk as a single token

- unigram: n = 1

- bigram: n = 2

- ex) "An adorable little boy is spreading smiles."

- -> unigrams : an, adorable, little, boy, is, spreading, smiles

- -> bigrams : an adorable, adorable little, little boy, boy is, is spreading, spreading smiles

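The unigram and bigram lists in the example above can be reproduced with a few lines of Python. The helper `ngrams` is hypothetical, and the sentence is lowercased with its final period dropped.

```python
def ngrams(tokens, n):
    """All contiguous n-token sequences, joined with spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = "An adorable little boy is spreading smiles".lower().split()
unigrams = ngrams(tokens, 1)
bigrams = ngrams(tokens, 2)
print(unigrams)  # ['an', 'adorable', 'little', 'boy', 'is', 'spreading', 'smiles']
print(bigrams)   # ['an adorable', 'adorable little', 'little boy', 'boy is', 'is spreading', 'spreading smiles']
```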

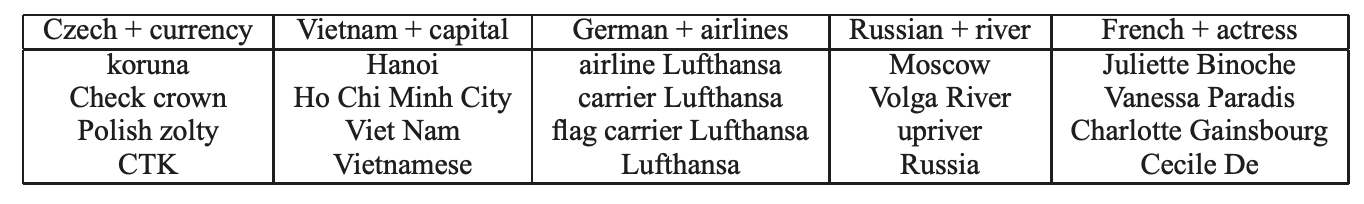

- The paper typically runs 2-4 passes over the training data with a decreasing threshold value, so that longer phrases consisting of several words can be formed
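The paper scores candidate bigrams with unigram and bigram counts:

$\text{score}(w_i, w_j) = \frac{\text{count}(w_i w_j) - \delta}{\text{count}(w_i) \times \text{count}(w_j)}$

Here the discounting coefficient $\delta$ prevents too many phrases made of very infrequent words. Bigrams scoring above a threshold become single tokens. Below is a one-pass sketch on a toy corpus. The corpus, `delta=1`, and `threshold=0.1` are hypothetical choices. Running the function again on its output with a lower threshold would form longer phrases, as in the later passes.

```python
from collections import Counter

def phrase_score(a, b, unigram, bigram, delta):
    """(count(a b) - delta) / (count(a) * count(b))"""
    return (bigram[(a, b)] - delta) / (unigram[a] * unigram[b])

def merge_phrases(tokens, delta, threshold):
    """One pass: join adjacent pairs whose score exceeds threshold into one token."""
    unigram = Counter(tokens)
    bigram = Counter(zip(tokens, tokens[1:]))
    merged, i = [], 0
    while i < len(tokens):
        if (i + 1 < len(tokens)
                and phrase_score(tokens[i], tokens[i + 1], unigram, bigram, delta) > threshold):
            merged.append(tokens[i] + "_" + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged

tokens = ("new york is big this is new york and this is fun "
          "but is it new york or this").split()
merged = merge_phrases(tokens, delta=1, threshold=0.1)
print(merged)
```

In this toy corpus "new york" scores 2/9 ≈ 0.22 and "this is" scores 1/12 ≈ 0.08. So only "new york" is merged, mirroring the paper's "this is" example. Any bigram seen only once scores 0 because of $\delta$.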

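- The quality of the phrase representations was evaluated with a new analogical reasoning task involving phrases

- The paper's table shows examples from the five categories of analogies used in this task

- Goal: compute the fourth phrase using the first three

- Accuracy of the best-performing model on this dataset: 72%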

4.1 Phrase Skip-Gram Results

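- First, a phrase-based training corpus was constructed, and several Skip-gram models were trained with different hyperparameters

- Negative Sampling and Hierarchical Softmax were compared, with and without subsampling of frequent tokens

- Negative Sampling achieved respectable accuracy even with k = 5, but k = 15 gave considerably better performance

- Surprisingly, Hierarchical Softmax performed poorly when trained without subsampling, but became the best-performing method when frequent words were downsampled

- → This shows that subsampling can speed up training and also improve accuracy

- To maximize accuracy on the phrase analogy task, the amount of training data was increased by using a dataset of about 33 billion words

- Used hierarchical softmax, a dimensionality of 1000, and the entire sentence as the context

- → This model reached 72% accuracy

- Reducing the size of the training dataset lowered accuracy to 66%

- → A large amount of training data is crucial

- As in the earlier results, the model combining hierarchical softmax with subsampling performed well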

Additive Compositionality

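- The authors found that Skip-gram representations exhibit another kind of linear structure, which makes it possible to meaningfully combine words by element-wise addition of their vector representations

- element-wise addition = adding the corresponding components of two vectors (the same operation as vector/matrix addition)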

- https://www.tutorialexample.com/element-wise-addition-explained-a-beginner-guide-machine-learning-tutorial/


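- The paper's table lists the four tokens closest to the sum of two vectors, using the best Skip-gram model

- Because word vectors are trained to predict the surrounding words in a sentence, a vector can be seen as representing the distribution of the contexts in which the word appears

- These values are related logarithmically to the probabilities computed by the output layer, so the sum of two word vectors is related to the product of the two context distributions

- The product here works as an AND function

- → Words assigned high probabilities by both word vectors get a high probability, and all other words get a low probability

- ex) If "Volga River" appears frequently in the same sentences as "Russian" and "river", the sum of the word vectors of "Russian" and "river" produces a feature vector close to the vector of "Volga River"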
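The "sum of vectors ↔ product of distributions" argument can be checked numerically under one simplifying assumption: a word's context distribution is a full softmax over output vectors, $p(c \mid w) \propto \exp(u_c^\top v_w)$. Then $\exp(u_c^\top (v_a + v_b)) = \exp(u_c^\top v_a)\exp(u_c^\top v_b)$. So the summed vector predicts the normalized product of the two distributions, which acts like an AND. All vectors below are random and hypothetical.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

rng = np.random.default_rng(0)
n_context, dim = 6, 4
U = rng.normal(size=(n_context, dim))  # output (context) vectors u_c
v_a = rng.normal(size=dim)             # stands in for e.g. vec("Russian")
v_b = rng.normal(size=dim)             # stands in for e.g. vec("river")

p_a = softmax(U @ v_a)
p_b = softmax(U @ v_b)
p_sum = softmax(U @ (v_a + v_b))       # context distribution predicted by the summed vector

# Normalized elementwise product: high only where BOTH distributions are high
product = p_a * p_b / (p_a * p_b).sum()
print(np.allclose(p_sum, product))  # True
```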

Comparison to Published Word Representations

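- In the paper's comparison table, empty cells mean the word was not in that model's vocabulary

- In terms of the quality of the learned representations, the big Skip-gram model trained on a large corpus visibly outperforms the other models

- Also, even though the Skip-gram model was trained on far more data, its training time is a fraction of that required by previous models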

Conclusion

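- The paper shows how to train distributed representations of words and phrases with the Skip-gram model, and demonstrates that these representations exhibit a linear structure that makes precise analogical reasoning possible

- These techniques can also be used to train the CBoW (continuous bag-of-words) model

- CBoW vs Skip-gram

- Thanks to the computationally efficient model architecture, the models were successfully trained on several orders of magnitude more data than previous models

- As a result, the quality of the learned word and phrase representations improved greatly, especially for rare entities

- The authors also found that subsampling frequent words results in faster training and much better representations of uncommon words

- Another contribution of the paper is the Negative sampling algorithm, an extremely simple training method that learns accurate representations, especially for frequent words

- The choice of training algorithm and hyperparameters is a task-specific decision

- → Different problems have different optimal hyperparameter configurations

- In the paper, the most crucial decisions affecting performance were the choice of model architecture, the size of the vectors, the subsampling rate, and the size of the training window

- A very interesting result is that word vectors can be somewhat meaningfully combined using just simple vector addition

- Another approach for learning phrase representations presented in the paper is simply to represent each phrase with a single token

- Combining these two approaches gives a powerful yet simple way to represent longer pieces of text while keeping computational complexity minimal

- Therefore, this work can be seen as complementary to existing approaches that try to represent phrases using recursive matrix-vector operations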

References

- Word2Vec Model Basics (1) - Concept Summary: https://pythonkim.tistory.com/92

- Summary of word2vec theory (shuuki4.wordpress.com)

- Hierarchical Softmax (HS) in word2vec: https://uponthesky.tistory.com/15

- [DL] Word2Vec, CBOW, Skip-Gram, Negative Sampling: https://wooono.tistory.com/244

- Word2Vec · Data Science: https://yngie-c.github.io/nlp/2020/05/28/nlp_word2vec/

- 3) N-gram Language Model (wikidocs.net)

- Element-wise Addition Explained - A Beginner Guide - Machine Learning Tutorial: https://www.tutorialexample.com/element-wise-addition-explained-a-beginner-guide-machine-learning-tutorial/
